HBase | Distributed, Scalable, NoSQL

HBase is an open-source, non-relational, column-oriented distributed database modeled after Google’s BigTable. It is written in Java and runs on top of HDFS. It can be seen as a distributed, multidimensional, sparse sorted map, and it provides real-time random read/write access to data stored in HDFS.

HBase falls under the "NoSQL" umbrella. Technically speaking, HBase is really more a "Data Store" than a "Data Base" because it lacks many of the features we find in an RDBMS, such as typed columns, secondary indexes, triggers, and advanced query languages.

HBase is not a direct replacement for a classic SQL database, although its performance has improved recently. HBase has many features that support efficient scaling. HBase clusters expand by adding RegionServers hosted on commodity-class servers (running on top of Hadoop). If a cluster expands from 10 to 20 RegionServers, for example, it doubles in both storage and processing capacity.

HBase is mainly used to power websites and products (e.g. StumbleUpon and Facebook's Messages) and to store data that also serves as a sink or a source for analytical jobs (usually MapReduce).

Before we select HBase in our application, we need to keep the following things in mind:

- We need to make sure that we have enough data. If we have hundreds of millions or billions of rows, then HBase is a good candidate. If we only have a few thousand/million rows, then using a traditional RDBMS might be a better choice due to the fact that all of our data might wind up on a single node (or two) and the rest of the cluster may be sitting idle.

- We need to make sure we can live without all the extra features that an RDBMS provides (e.g. typed columns, secondary indexes, transactions, advanced query languages). An application built against an RDBMS cannot be "ported" to HBase by simply changing a JDBC driver, for example. Consider moving from an RDBMS to HBase as a complete redesign as opposed to a port.

- We need to make sure we have enough hardware. Even HDFS doesn’t do well with anything less than 5 DataNodes. HBase can run quite well stand-alone on a laptop, but this should be considered a development configuration only.

Key Features

- Strongly consistent and random reads/writes.

- Horizontal scalability and Automatic sharding.

- Automatic RegionServer failover.

- Hadoop/HDFS Integration with MapReduce.

- Native Java Client API (HTable).

- Support for Filters, Operational Management.

- Multiple client interfaces: the native Java library, Thrift, and REST.

- A few third-party clients are also available, each with its own advantages:

- Apache Phoenix (JDBC layer for HBase)

- StumbleUpon Asynchbase (asynchronous, non-blocking)

- Kundera (JPA 1.0 ORM library)

Key Constituents

|

| HBase cluster |

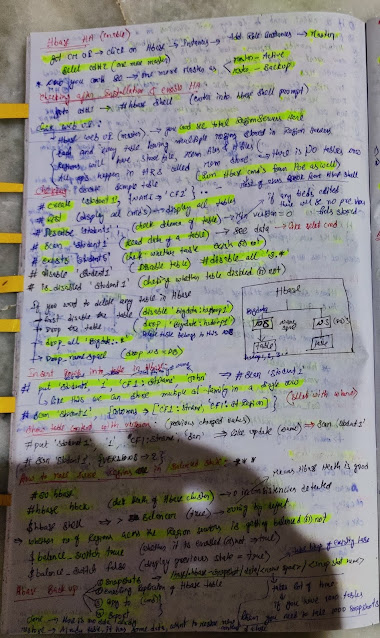

Basic components of a typical HBase system (end to end):

- One Master Server

- The master is responsible for assigning regions to region servers. It uses Apache ZooKeeper, a reliable, highly available, persistent, and distributed coordination service, to facilitate that task.

- The master server is also responsible for handling load balancing of regions across region servers, to unload busy servers and move regions to less occupied ones. In addition, it takes care of schema changes and other metadata operations, such as creation of tables and column families.

- Technically, HMaster is the implementation of the Master Server. The Master server is responsible for monitoring all RegionServer instances in the cluster and is the interface for all metadata changes. In a distributed cluster, the Master typically runs on the NameNode. A cluster may have multiple Masters, all of which compete to run the cluster. If the active Master loses its lease in ZooKeeper (or shuts down), the remaining Masters jostle to take over the Master role.

- Multiple Region Servers

- In HBase, tables are partitioned into Regions. A Region is defined by its start and end row keys and is the smallest unit of distribution. Regions are assigned to RegionServers (also known as HBase cluster slaves), which handle all read and write requests for the regions they serve and split regions that have exceeded the configured region size threshold. RegionServers can be added or removed while the system is up and running to accommodate changing workloads.

- Technically, HRegionServer is the RegionServer implementation. It is responsible for serving and managing regions. In a distributed cluster, a RegionServer runs on a DataNode. Each Region Server is responsible for serving a set of regions, and one Region (i.e. a range of rows) can be served by only one Region Server.

- HBase Clients

- End clients can be command-line, Java, Thrift, or REST based. One of my favourite JDBC clients is Apache Phoenix (to be discussed in later posts).

Figure: Region Server Internals

As in other SQL/NoSQL stores, HBase has the familiar concepts of tables, rows, columns, and cells:

- The most basic unit is a column.

- One or more columns form a row that is addressed uniquely by a row key.

- A number of rows, in turn, form a table, and there can be many tables. This looks similar to an RDBMS table in that rows are composed of columns, but in HBase the columns are in turn grouped into column families.

- Each column may have multiple versions, with each distinct value contained in a separate cell.

- All rows are always sorted lexicographically by their row key.

- All column members of a column family have a common prefix. For example, the columns courses:history and courses:math are both members of the courses column family. Physically, all column family members are stored together on the filesystem in the same low-level storage files, called HFile. Because tunings and storage specifications are done at the column family level, it is advised that all column family members have the same general access pattern and size characteristics.

- It can be expensive to add new column families, so column families need to be defined when the table is created and should not be changed too often, nor should there be too many. Fortunately, a column family may have any number of columns. Each column family may have its own rules regarding how many versions of a given cell to keep. All the columns within a column family share the same characteristics, such as versioning and compression. The name of the column family must consist of printable characters, a notable difference from all other names or values.

- Columns are often referenced as family:qualifier, with the qualifier being an arbitrary array of bytes. You could have millions of columns in a particular column family. There is also no type or length boundary on the column values.

- Every column value, or cell, is timestamped, either implicitly by the system or explicitly by the user. This can be used to save multiple versions of a value as it changes over time. Different versions of a cell are stored in decreasing timestamp order, allowing you to read the newest value first.

- The user can specify how many versions of a value should be kept. In addition, there is support for predicate deletions allowing you to keep, for example, only values written in the last week.
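The versioning behaviour described in the last two bullets can be sketched with a reverse-ordered map. This is only an illustration using plain `java.util` collections, not HBase code: the class `VersionedCell` and its `maxVersions` parameter are hypothetical stand-ins for a cell and for a column family's version limit.

```java
import java.util.Comparator;
import java.util.Map;
import java.util.TreeMap;

/** Toy model of one cell that keeps a bounded number of timestamped versions. */
class VersionedCell {
    // Newest timestamp first, mirroring the decreasing-timestamp ordering described above.
    private final TreeMap<Long, String> versions = new TreeMap<>(Comparator.reverseOrder());
    private final int maxVersions; // hypothetical stand-in for a per-family version limit

    VersionedCell(int maxVersions) {
        this.maxVersions = maxVersions;
    }

    /** Stores a value under an explicit timestamp and drops the oldest versions beyond the limit. */
    void put(long timestamp, String value) {
        versions.put(timestamp, value);
        while (versions.size() > maxVersions) {
            versions.pollLastEntry(); // last entry = oldest timestamp in reverse order
        }
    }

    /** Returns the newest value, or null if the cell is empty. */
    String latest() {
        Map.Entry<Long, String> first = versions.firstEntry();
        return first == null ? null : first.getValue();
    }

    /** Returns the newest value written at or before the given timestamp, or null. */
    String asOf(long timestamp) {
        // In a reverse-ordered map, ceilingEntry finds the first key <= timestamp.
        Map.Entry<Long, String> entry = versions.ceilingEntry(timestamp);
        return entry == null ? null : entry.getValue();
    }

    int versionCount() {
        return versions.size();
    }

    public static void main(String[] args) {
        VersionedCell cell = new VersionedCell(2);
        cell.put(100L, "v1");
        cell.put(200L, "v2");
        cell.put(300L, "v3"); // evicts the version written at t=100
        System.out.println(cell.latest());   // v3
        System.out.println(cell.asOf(250L)); // v2
        System.out.println(cell.asOf(150L)); // null, because t=100 was evicted
    }
}
```

Keeping the map in reverse timestamp order makes "read the newest value first" a constant-position lookup, which is the property the bullets above rely on.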

- The BigTable model, as implemented by HBase, is a sparse, distributed, persistent, multidimensional map, which is indexed by row key, column key, and a timestamp. Putting this together, we can express the access to data like so:

(Table, RowKey, Family, Column, Timestamp) => Value

In a more programming-language style, such as Java, we can represent it as follows (just to visualize and understand):

SortedMap<RowKey, List<SortedMap<Column, List<Value, Timestamp>>>>
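To make this nested-map picture concrete, here is a small runnable sketch using plain `java.util` maps. It is an assumption-laden illustration rather than HBase code: the class `SparseTableSketch` is hypothetical, keys and values are strings instead of byte arrays, and nested sorted maps are used at every level instead of lists.

```java
import java.util.Comparator;
import java.util.NavigableMap;
import java.util.TreeMap;

/** Toy in-memory table: row key -> column family -> qualifier -> timestamp -> value. */
class SparseTableSketch {
    // Rows stay sorted lexicographically by row key.
    private final NavigableMap<String, NavigableMap<String, NavigableMap<String, NavigableMap<Long, String>>>> rows =
            new TreeMap<>();

    /** Writes one cell version at the coordinates (row, family, qualifier, timestamp). */
    void put(String row, String family, String qualifier, long timestamp, String value) {
        rows.computeIfAbsent(row, r -> new TreeMap<>())
            .computeIfAbsent(family, f -> new TreeMap<>())
            // Newest timestamp first within a cell.
            .computeIfAbsent(qualifier, q -> new TreeMap<Long, String>(Comparator.reverseOrder()))
            .put(timestamp, value);
    }

    /** Returns the newest value for (row, family, qualifier), or null if the cell does not exist. */
    String get(String row, String family, String qualifier) {
        NavigableMap<String, NavigableMap<String, NavigableMap<Long, String>>> families = rows.get(row);
        if (families == null) return null;
        NavigableMap<String, NavigableMap<Long, String>> columns = families.get(family);
        if (columns == null) return null;
        NavigableMap<Long, String> versions = columns.get(qualifier);
        return versions == null ? null : versions.firstEntry().getValue();
    }

    /** Returns the lexicographically smallest row key, or null for an empty table. */
    String firstRowKey() {
        return rows.isEmpty() ? null : rows.firstKey();
    }

    public static void main(String[] args) {
        SparseTableSketch table = new SparseTableSketch();
        table.put("row2", "courses", "history", 1L, "B");
        table.put("row1", "courses", "math", 1L, "C");
        table.put("row1", "courses", "math", 2L, "A"); // newer version of the same cell
        System.out.println(table.firstRowKey());                  // row1
        System.out.println(table.get("row1", "courses", "math")); // A
        System.out.println(table.get("row2", "courses", "math")); // null (sparse: no such cell)
    }
}
```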

The first SortedMap is the table, containing a List of column families. The families contain another SortedMap, which represents the columns and their associated values. These values are in the final List, which holds the value and the timestamp.

- Region

- The basic unit of scalability and load balancing in HBase is called a region. Regions are essentially contiguous ranges of rows stored together. They are dynamically split by the system when they become too large. Alternatively, they may also be merged to reduce their number and the required storage files. An HBase system may have more than one region server.

- Initially there is only one region for a table. As we add data to it, the system monitors the region to ensure that it does not exceed a configured maximum size. If it exceeds the limit, the region is split in two at the middle key of the region, creating two roughly equal halves.

- Each region is served by exactly one region server, and each of these servers can serve many regions at any time.

- Rows are grouped in regions and may be served by different servers.
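The region bullets above can be sketched as a sorted directory of region start keys. This is a simplified illustration, not how HBase stores its region metadata: the class `RegionDirectory`, the server names, and the decision to hand the upper half of a split directly to another server are all assumptions made for the example.

```java
import java.util.Arrays;
import java.util.List;
import java.util.TreeMap;

/** Toy directory mapping each region's start row key to the server hosting it. */
class RegionDirectory {
    // The empty string is the start key of the first region, so every row key has a floor entry.
    private final TreeMap<String, String> regionStartToServer = new TreeMap<>();

    RegionDirectory(String firstServer) {
        regionStartToServer.put("", firstServer); // a new table starts with a single region
    }

    /** Finds the server for a row: the region with the greatest start key <= rowKey. */
    String locate(String rowKey) {
        return regionStartToServer.floorEntry(rowKey).getValue();
    }

    /** Picks the middle key of a region's sorted row keys as the split point. */
    static String middleKey(List<String> sortedRowKeys) {
        return sortedRowKeys.get(sortedRowKeys.size() / 2);
    }

    /** Splits the region containing splitKey; rows >= splitKey now belong to the new region. */
    void split(String splitKey, String serverForUpperHalf) {
        regionStartToServer.put(splitKey, serverForUpperHalf);
    }

    int regionCount() {
        return regionStartToServer.size();
    }

    public static void main(String[] args) {
        RegionDirectory directory = new RegionDirectory("rs1");
        List<String> rowKeys = Arrays.asList("a", "b", "c", "d", "e", "f");
        String splitKey = middleKey(rowKeys);       // "d"
        directory.split(splitKey, "rs2");
        System.out.println(directory.locate("b"));  // rs1
        System.out.println(directory.locate("d"));  // rs2
        System.out.println(directory.locate("e"));  // rs2
    }
}
```

Because rows are sorted by key, finding the region for a row is a single floor lookup on the start keys, and a split only adds one new start key.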

- Storage API

- The API offers operations to create and delete tables and column families. There are the usual operations for clients to create or delete values as well as to retrieve them with a given row key.

- A scan API allows us to efficiently iterate over ranges of rows and to limit which columns are returned or how many versions of each cell. Columns can be matched using filters, and versions can be selected using time ranges, specifying start and end times.

- The data is stored in store files called HFiles. The files are sequences of blocks with a block index stored at the end. The index is loaded when the HFile is opened and kept in memory. The default block size is 64 KB but can be configured differently if required. The store files provide an API to access specific values and also to scan ranges of values given a start and end key.

- The store files are typically saved in the Hadoop Distributed File System (HDFS), which provides a scalable, persistent, and replicated storage layer for HBase. It guarantees that data is never lost by writing the changes across a configurable number of physical servers.

- When data is updated, it is first written to a commit log, called the write-ahead log (WAL) in HBase, and then stored in the in-memory MemStore.
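The range scan mentioned in the Storage API bullets can be illustrated on a sorted map. This is a rough sketch with plain Java collections, not the HBase client API: `RangeScanSketch`, `cells`, and `sampleTable` are hypothetical helpers, and the single `column` argument stands in for the richer column and filter selection a real scan offers. The start row is inclusive and the stop row exclusive, as in HBase scans.

```java
import java.util.Map;
import java.util.NavigableMap;
import java.util.TreeMap;

/** Toy range scan over a table kept sorted by row key. */
class RangeScanSketch {
    /** Returns rows with startRow <= key < stopRow, keeping only the requested column. */
    static NavigableMap<String, String> scan(NavigableMap<String, Map<String, String>> table,
                                             String startRow, String stopRow, String column) {
        NavigableMap<String, String> result = new TreeMap<>();
        // subMap is a sorted view, so only rows inside the range are visited.
        for (Map.Entry<String, Map<String, String>> row
                : table.subMap(startRow, true, stopRow, false).entrySet()) {
            String value = row.getValue().get(column);
            if (value != null) {
                result.put(row.getKey(), value); // rows lacking the column are skipped
            }
        }
        return result;
    }

    /** Builds a column -> value map from alternating column/value arguments. */
    static Map<String, String> cells(String... columnValuePairs) {
        Map<String, String> row = new TreeMap<>();
        for (int i = 0; i + 1 < columnValuePairs.length; i += 2) {
            row.put(columnValuePairs[i], columnValuePairs[i + 1]);
        }
        return row;
    }

    /** Small made-up table used only for this example. */
    static NavigableMap<String, Map<String, String>> sampleTable() {
        NavigableMap<String, Map<String, String>> table = new TreeMap<>();
        table.put("user1", cells("info:name", "Ann", "info:city", "Oslo"));
        table.put("user2", cells("info:city", "Rome"));
        table.put("user3", cells("info:name", "Cid"));
        table.put("user4", cells("info:name", "Dee"));
        return table;
    }

    public static void main(String[] args) {
        // Prints {user1=Ann, user3=Cid}: user2 has no info:name, user4 is outside the range.
        System.out.println(scan(sampleTable(), "user1", "user4", "info:name"));
    }
}
```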

- Reading & Writing

- Read Path

- As a general rule, if we need fast access to data, we should keep it ordered and as much of it as possible in memory. HBase accomplishes both of these goals, allowing it to serve millisecond reads in most cases. A read against HBase must be reconciled between the persisted HFiles and the data still in the MemStore. HBase has an LRU cache for reads. This cache, also called BlockCache, remains in the JVM heap along with MemStore.

- The BlockCache is designed to keep frequently accessed data from the HFiles in memory so as to avoid disk reads as much as possible. A RegionServer has a single BlockCache shared by the regions it serves, and block caching can be turned on or off per column family. Understanding the BlockCache is an important part of understanding how to run HBase at optimal performance. The "Block" in BlockCache is the unit of data that HBase reads from disk in a single pass. The HFile is physically laid out as a sequence of blocks plus an index over those blocks. This means that reading a block from HBase requires only looking up that block's location in the index and retrieving it from disk.

- The block is the smallest indexed unit of data and is the smallest unit of data that can be read from disk. The block size is configured per column family, and the default value is 64 KB. We may tweak this value to suit our use case. For random lookups a smaller block size is recommended, but smaller blocks create a larger index and thereby consume more memory. For more sequential scans that read many blocks at a time, a larger block size is preferable. Larger blocks mean fewer index entries and thus a smaller index, which saves memory.
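The interplay of blocks, the block index, and an LRU cache described above can be sketched as follows. This is a toy model under loud assumptions, not HBase's HFile or BlockCache code: `BlockCacheSketch` is hypothetical, `keysPerBlock` stands in for the block size in bytes, and `cacheCapacity` counts whole blocks.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/** Toy store file: sorted keys cut into blocks, a block index, and an LRU cache of blocks. */
class BlockCacheSketch {
    private final List<List<String>> blocksOnDisk = new ArrayList<>();
    private final TreeMap<String, Integer> blockIndex = new TreeMap<>(); // first key -> block number
    private final LinkedHashMap<Integer, List<String>> cache;
    private int diskReads = 0;

    BlockCacheSketch(List<String> sortedKeys, int keysPerBlock, int cacheCapacity) {
        for (int i = 0; i < sortedKeys.size(); i += keysPerBlock) {
            int end = Math.min(i + keysPerBlock, sortedKeys.size());
            List<String> block = new ArrayList<>(sortedKeys.subList(i, end));
            blockIndex.put(block.get(0), blocksOnDisk.size());
            blocksOnDisk.add(block);
        }
        // accessOrder=true makes iteration order least-recently-used first.
        cache = new LinkedHashMap<Integer, List<String>>(16, 0.75f, true) {
            @Override
            protected boolean removeEldestEntry(Map.Entry<Integer, List<String>> eldest) {
                return size() > cacheCapacity;
            }
        };
    }

    /** Looks up a key: index -> block number -> cached block, or a simulated disk read. */
    boolean contains(String key) {
        Map.Entry<String, Integer> entry = blockIndex.floorEntry(key);
        if (entry == null) return false; // key sorts before the first block
        return loadBlock(entry.getValue()).contains(key);
    }

    private List<String> loadBlock(int blockNumber) {
        List<String> block = cache.get(blockNumber); // a hit also refreshes the LRU position
        if (block == null) {
            diskReads++;
            block = blocksOnDisk.get(blockNumber);
            cache.put(blockNumber, block);
        }
        return block;
    }

    int indexEntries() { return blockIndex.size(); }

    int diskReads() { return diskReads; }

    public static void main(String[] args) {
        List<String> keys = Arrays.asList("a", "b", "c", "d", "e", "f", "g", "h");
        BlockCacheSketch small = new BlockCacheSketch(keys, 2, 2);
        BlockCacheSketch large = new BlockCacheSketch(keys, 4, 2);
        System.out.println(small.indexEntries()); // 4 index entries with small blocks
        System.out.println(large.indexEntries()); // 2 index entries with larger blocks
        small.contains("a");
        small.contains("b"); // same block as "a", served from the cache
        System.out.println(small.diskReads());    // 1
    }
}
```

The two constructor calls in `main` show the trade-off from the text: halving the block size doubles the number of index entries that must be held in memory.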

- Reading a row from HBase requires first checking the MemStore for any pending modifications. Then the BlockCache is examined to see if the block containing this row has been recently accessed. Finally, the relevant HFiles on disk are accessed. There are more things going on under the hood, but this is the overall outline.

- Note that HFiles contain a snapshot of the MemStore at the point when it was flushed. Data for a complete row can be stored across multiple HFiles. In order to read a complete row, HBase must read across all HFiles that might contain information for that row in order to compose the complete record.
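The reconciliation step in the last two bullets, where a row is assembled from the MemStore and several HFiles, can be sketched like this. It is a simplified illustration: `RowReaderSketch` is a hypothetical helper, each source is reduced to a column-to-value map for one row, and "newer wins" is decided purely by the order of the sources rather than by cell timestamps.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/** Toy merge of one row's cells from the MemStore and several HFiles. */
class RowReaderSketch {
    /**
     * Composes a complete row. The MemStore is consulted first, then the HFiles
     * from newest to oldest; the first value seen for a column wins.
     */
    static Map<String, String> readRow(Map<String, String> memStoreRow,
                                       List<Map<String, String>> hFileRowsNewestFirst) {
        Map<String, String> row = new TreeMap<>(memStoreRow); // pending modifications win
        for (Map<String, String> hFileRow : hFileRowsNewestFirst) {
            for (Map.Entry<String, String> cell : hFileRow.entrySet()) {
                row.putIfAbsent(cell.getKey(), cell.getValue()); // keep the newer value if present
            }
        }
        return row;
    }

    public static void main(String[] args) {
        Map<String, String> memStore = new TreeMap<>();
        memStore.put("courses:math", "A");

        Map<String, String> newerHFile = new TreeMap<>();
        newerHFile.put("courses:math", "B");
        newerHFile.put("courses:history", "C");

        Map<String, String> olderHFile = new TreeMap<>();
        olderHFile.put("courses:history", "F");
        olderHFile.put("courses:art", "D");

        List<Map<String, String>> hFiles = new ArrayList<>();
        hFiles.add(newerHFile);
        hFiles.add(olderHFile);

        // Prints {courses:art=D, courses:history=C, courses:math=A}
        System.out.println(readRow(memStore, hFiles));
    }
}
```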

- Write Path

Whether we add a new row in HBase or modify an existing row, the internal process remains the same. HBase receives the call and persists the change. When a write is made, by default, it goes to two places:

- MemStore

- The MemStore is a write buffer (64 MB by default in older releases; newer releases default to 128 MB). When the data in the MemStore reaches its threshold, it is flushed to a new HFile on HDFS. Each column family can have many HFiles, but each HFile belongs to only one column family.

- Write-ahead log, or WAL (also referred to as the HLog)

- The WAL is for data reliability. It is persisted on HDFS, and each Region Server has only one WAL. If the Region Server goes down before a MemStore flush, HBase can replay the WAL to restore the data on a new Region Server.

The default behavior of HBase is to write in both places to maintain data durability. Only after the change is written to and confirmed in both places is the write considered complete. The MemStore is a write buffer where HBase accumulates data in memory before a permanent write. Its contents are flushed to disk to form an HFile when the MemStore fills up. It does not write to an existing HFile; instead, it forms a new file on every flush. The HFile is the underlying storage format for HBase. HFiles belong to a column family, and a column family can have multiple HFiles, but a single HFile can't have data for multiple column families. There is one MemStore per column family.

Failures are common in large distributed systems, and HBase is no exception. Imagine that the server hosting a MemStore that has not yet been flushed crashes. We'll lose the data that was in memory but not yet persisted. HBase safeguards against that by writing to the WAL before the write completes. Every server that's part of the HBase cluster keeps a WAL to record changes as they happen. The WAL is a file on the underlying file system. A write isn't considered successful until the new WAL entry is successfully written. This guarantee makes HBase as durable as the file system backing it. Most of the time, HBase is backed by the Hadoop Distributed Filesystem (HDFS). If HBase goes down, the data that was not yet flushed from the MemStore to an HFile can be recovered by replaying the WAL. We don't have to do this manually. It's all handled under the hood by HBase as a part of the recovery process. There is a single WAL per HBase server, shared by all tables (and their column families) served from that server.

As we can imagine, skipping the WAL during writes can help improve write performance. There's one less thing to do, right? We don't recommend disabling the WAL unless we're willing to lose data when things fail. In case we want to experiment, we can disable the WAL like this (client APIs will be discussed later):

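// "row1" is a placeholder row key; a Put is always created for a specific row.
Put p = new Put(Bytes.toBytes("row1"));

// Skip the WAL for this write (older client versions used p.setWriteToWAL(false)).
p.setDurability(Durability.SKIP_WAL);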

Note that every write to HBase requires confirmation from both the WAL (Write Ahead Log) and the MemStore. The two steps ensure that every write to HBase happens as fast as possible while maintaining durability. The MemStore is flushed to a new HFile when it fills up.
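The write path described in this section (log first, then buffer, flush to a new file when full, replay the log after a crash) can be sketched in a few lines. This is a toy model with plain Java collections, not HBase internals: `WritePathSketch` is hypothetical, `flushThreshold` counts entries rather than bytes, and clearing the log on flush is a simplification of how old WAL files are retired.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.TreeMap;

/** Toy write path: every put goes to the WAL and the MemStore; full MemStores become new HFiles. */
class WritePathSketch {
    private final List<String[]> wal = new ArrayList<>();            // append-only {row, value} edits
    private TreeMap<String, String> memStore = new TreeMap<>();      // sorted in-memory write buffer
    private final List<TreeMap<String, String>> hFiles = new ArrayList<>();
    private final int flushThreshold;                                // entries, standing in for bytes

    WritePathSketch(int flushThreshold) {
        this.flushThreshold = flushThreshold;
    }

    void put(String row, String value) {
        wal.add(new String[] {row, value}); // 1. record the edit durably
        memStore.put(row, value);           // 2. buffer it in memory
        if (memStore.size() >= flushThreshold) {
            flush();
        }
    }

    /** Writes the MemStore out as a brand-new file; existing files are never modified. */
    void flush() {
        hFiles.add(memStore);
        memStore = new TreeMap<>();
        wal.clear(); // simplification: flushed edits are no longer needed for recovery
    }

    /** Simulates a crash that wipes memory, followed by recovery from the WAL. */
    void crashAndRecover() {
        memStore = new TreeMap<>();
        for (String[] edit : wal) {
            memStore.put(edit[0], edit[1]);
        }
    }

    int hFileCount() { return hFiles.size(); }

    int walSize() { return wal.size(); }

    String memStoreValue(String row) { return memStore.get(row); }

    public static void main(String[] args) {
        WritePathSketch store = new WritePathSketch(2);
        store.put("a", "1");
        store.put("b", "2"); // reaches the threshold and triggers a flush
        store.put("c", "3"); // lives only in the WAL and the MemStore
        store.crashAndRecover();
        System.out.println(store.hFileCount());       // 1
        System.out.println(store.memStoreValue("c")); // 3, restored by replaying the WAL
    }
}
```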

Known Use Cases

- OpenTSDB - The Scalable Time Series Database runs on top of HBase and is used for storing massive amounts of time series data.

- StumbleUpon - Uses HBase to make the best recommendations, since it has to manage a lot of user signals. Every thumb-up, stumble, and share (among other feedback from users) is stored.

- Mozilla - For managing crash reports etc.

- Adobe - Using HBase in several areas from social services to structured data and processing for internal use.

- Explorys uses an HBase cluster containing over a billion anonymized clinical records, to enable subscribers to search and analyse patient populations, treatment protocols, and clinical outcomes.

- Facebook uses HBase to power their Messages infrastructure.

- OpenLogic stores all the world’s open source packages, versions, files, and lines of code in HBase for both near-real-time access and analytical purposes.

- Openplaces is a search engine for travel that uses HBase to store terabytes of web pages and travel-related entity records.

- Powerset (a Microsoft company) uses HBase to store raw documents.

- Shopping Engine at Tokenizer is a web crawler; it uses HBase to store URLs and Outlinks (more than a billion).

- Tracker uses HBase to store and serve online influencer data in real-time. They use MapReduce to frequently re-score their entire data set as they keep updating influencer metrics on a daily basis.

- Twitter runs HBase across its entire Hadoop cluster. HBase provides a distributed, read/write backup of all MySQL tables in Twitter's production backend, allowing engineers to run MapReduce jobs over the data while maintaining the ability to apply periodic row updates.

- WorldLingo - The WorldLingo Multilingual Archive uses HBase to store millions of documents that they scan using Map/Reduce jobs to machine translate them into all or selected target languages from their set of available machine translation languages.

Failover Scenarios

- DataNode Failover - Handled by HDFS replication (out of the box as part of a Hadoop deployment).

- RegionServer Failover (caused by partial or whole server failure) - Handled automatically (an out-of-the-box feature): the HBase Master reassigns Regions to the available Region Servers.

- HMaster Failover - In production systems, master node failover is made automatic with multiple parallel HMasters (a custom setup, not part of the default deployment).
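The RegionServer failover case above boils down to handing a failed server's regions to the survivors. The following is only a sketch of that idea: `FailoverSketch`, the region and server names, and the round-robin policy are assumptions for illustration, and the WAL replay that accompanies a real recovery is not modelled.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/** Toy reassignment of regions after a RegionServer failure. */
class FailoverSketch {
    /** Returns a new assignment in which the failed server's regions are spread round-robin. */
    static Map<String, String> reassign(Map<String, String> regionToServer,
                                        String failedServer,
                                        List<String> liveServers) {
        if (liveServers.isEmpty()) {
            throw new IllegalArgumentException("no live RegionServers left");
        }
        Map<String, String> result = new TreeMap<>(regionToServer); // copy; the input is not modified
        int next = 0;
        for (Map.Entry<String, String> assignment : result.entrySet()) {
            if (assignment.getValue().equals(failedServer)) {
                assignment.setValue(liveServers.get(next % liveServers.size()));
                next++;
            }
        }
        return result;
    }

    public static void main(String[] args) {
        Map<String, String> assignment = new TreeMap<>();
        assignment.put("region-a", "rs1");
        assignment.put("region-b", "rs2");
        assignment.put("region-c", "rs1");
        assignment.put("region-d", "rs3");

        // rs1 fails; its two regions go to rs2 and rs3 in turn.
        // Prints {region-a=rs2, region-b=rs2, region-c=rs3, region-d=rs3}
        System.out.println(reassign(assignment, "rs1", Arrays.asList("rs2", "rs3")));
    }
}
```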

Useful Links

http://www.slideshare.net/enissoz/hbase-and-hdfs-understanding-filesystem-usage

https://support.pivotal.io/hc/en-us/articles/200950308-HBase-Basics

http://hadoop-hbase.blogspot.se/2013/07/hbase-and-data-locality.html
